Welcome, AI Enthusiasts.

Quantization, a method to reduce AI model size by lowering data precision, has limits that impact performance, especially for models trained on extensive datasets.

Norwegian startup Factiverse aims to combat disinformation using a business-to-business AI tool that provides live fact-checking for text, video, and audio.

In today’s issue:

🤖 AI Efficiency

🦾 Factiverse

🛠️ AI Products

🥋 AI Dojo

🤖 3 Quick AI updates

Read time: 8 minutes.

LATEST HIGHLIGHTS

Image source: Ideogram

To recap: Quantization, a method to reduce AI model size by lowering data precision, has limits that impact performance, especially for models trained on extensive datasets. While it reduces computational costs during inference, excessive precision reduction harms accuracy, as seen with Meta’s Llama 3. Researchers suggest strategies like training in lower precision and better data curation, but caution that such shortcuts have diminishing returns, underscoring the need for innovative AI architectures and training methods.

The details:

Quantization, a technique that reduces AI models' computational demands by lowering the precision of data representation, has significant limitations. While effective at cutting inference costs, it can degrade model performance, especially when applied to large models that were trained extensively. For instance, Meta's Llama 3 exhibited quality issues after quantization because of how it was trained.

The study finds that lowering precision beyond a certain point, typically below 7 or 8 bits, can cause noticeable performance drops unless the model is exceptionally large. Quantization makes AI models more efficient, but it isn't a universal solution, as models trained on massive datasets face diminishing returns when scaled up further.

To address these challenges, researchers propose approaches such as training models in low-precision formats from the start or meticulously curating data for smaller, more efficient models. These methods carry their own trade-offs, however, underscoring the need for new architectures designed to handle low-precision training well. The research concludes that inference costs can only be reduced so far and calls for a balanced approach to data, training, and precision.
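To make "lowering precision" concrete, here is a toy illustration of how reconstruction error grows as the bit width shrinks. It is a minimal sketch, assuming simple symmetric, per-tensor rounding applied to synthetic Gaussian "weights"; it is not the method used in the study or in any production library, and it measures only how far the rounded weights drift from the originals, not model accuracy or the dataset-size effect the researchers describe.

```python
import random


def quantize_dequantize(weights, bits):
    """Symmetric per-tensor uniform quantization (illustrative only):
    map floats to signed integers with `bits` bits, then back to floats."""
    qmax = 2 ** (bits - 1) - 1              # e.g. 127 for 8-bit, 7 for 4-bit
    scale = max(abs(w) for w in weights) / qmax
    if scale == 0:                          # all-zero tensor: nothing to quantize
        return list(weights)
    restored = []
    for w in weights:
        q = round(w / scale)                # nearest integer code
        q = max(-qmax, min(qmax, q))        # clamp to the representable range
        restored.append(q * scale)          # dequantize back to a float
    return restored


def mean_squared_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Synthetic stand-in for one layer's weights (an assumption, not real model data).
random.seed(0)
weights = [random.gauss(0.0, 0.02) for _ in range(10_000)]

errors = {}
for bits in (16, 8, 6, 4, 3, 2):
    errors[bits] = mean_squared_error(weights, quantize_dequantize(weights, bits))
    print(f"{bits:>2}-bit  MSE = {errors[bits]:.3e}")
```

Each bit removed roughly halves the number of available levels, so the rounding step grows and the error climbs quickly at the low end. This mirrors, in a very simplified way, why aggressive quantization below about 8 bits tends to hurt quality.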

Here is the key takeaway: While quantization effectively reduces AI model costs and computational demands, it has inherent limits. Excessive precision reduction can harm performance, particularly for large models trained on extensive datasets. This highlights the need for careful data curation, innovative training methods, and new architectures that balance efficiency and quality in AI development.

FACTIVERSE

Norwegian Startup Factiverse Leverages AI to Combat Disinformation

Image source: Factiverse

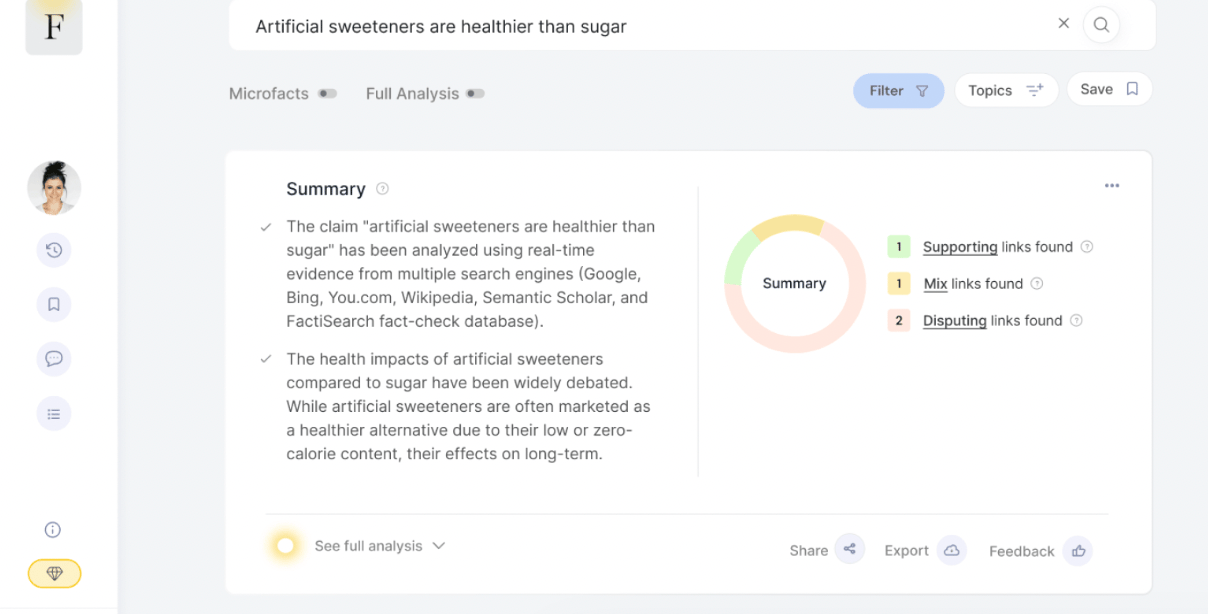

In Summary: Norwegian startup Factiverse aims to combat disinformation using a business-to-business AI tool that provides live fact-checking for text, video, and audio. Unlike generative AI, Factiverse's model is built on credible, high-quality data and is designed to identify claims, verify their accuracy, and recommend reliable sources. The company has worked with media and financial partners, including providing live fact-checking for the U.S. presidential debates. According to the company, its model, trained with a focus on credibility and precision, outperforms major language models like GPT-4 at fact-checking. With $1.45 million in pre-seed funding, the startup plans to expand globally and raise a seed round in 2025.

Key points:

- Disinformation Problem: Disinformation, amplified by generative AI’s deepfakes and hallucinated facts, surged during the U.S. 2024 presidential election.

- Factiverse's Solution: Factiverse offers a B2B tool for live fact-checking of text, video, and audio, helping businesses save time and mitigate reputational or legal risks.

- Data and Model: Factiverse’s model is trained on credible, well-curated data instead of the less reliable data used by generative AI. It uses machine learning and natural language processing to identify claims, verify them, and recommend historically credible sources.

- Performance: Factiverse says its model outperforms GPT-4, Mistral 7B, and GPT-3 at identifying fact-check-worthy claims in 114 languages and at determining claim veracity, with an 80% success rate.

- Current Progress: The startup has raised $1.45 million in pre-seed funding, collaborated with media and financial partners, and provided live fact-checking for the U.S. presidential debates.

- Future Plans: Factiverse aims to expand globally, improve its model’s accuracy, and raise a seed funding round in 2025. It seeks customers and investors committed to trust and credibility.

Our thoughts: Factiverse's focus on live fact-checking using curated, credible data makes it a timely solution in an era of rampant disinformation, especially as generative AI amplifies the problem. Its B2B tool addresses key business needs like saving research time and reducing reputational risks, with claims of outperforming major models like GPT-4 in fact-checking. While its partnerships and real-world applications, such as fact-checking the U.S. presidential debates, build credibility, scaling and competing with tech giants will be challenging. Factiverse's success hinges on delivering measurable ROI and proving its value as trust and credibility become paramount in AI-driven industries.

TRENDING TECHS

🛠 Flatirons Fuse: Intelligent CSV import tool for websites.

🧾 OpenTaskAI: Connecting AI freelancers with businesses globally!

🤖 Melior Contract Intelligence AI: AI contract intelligence for managers, not just lawyers.

AI DOJO

ChatGPT

One of the new and noteworthy features of ChatGPT is its integration with memory, which enables it to remember past interactions and adapt its responses based on accumulated knowledge from previous conversations. This functionality allows ChatGPT to offer a more personalized experience by recalling details, preferences, or ongoing projects discussed across sessions, much like a human might remember and build on past discussions.

Here’s how it works in daily life:

1. Personalized Conversations: ChatGPT can now retain key pieces of information between chats. For example, if you tell it that you're working on a project or need reminders about specific tasks, it can recall those details in future interactions and help you stay on track. If you mentioned your favorite way to learn or your preferred writing style in a prior chat, ChatGPT can tailor its advice and responses accordingly without needing to be reminded each time.

2. Improved Context Understanding: With memory, ChatGPT can also better understand long-term goals and reference past exchanges to provide more contextually relevant advice. For instance, if you've asked it to help with a writing project, it could remember the tone or structure you've preferred and apply that to new prompts without needing a refresher.

3. Customizable AI Assistant: Users can manage what ChatGPT remembers. This means you can update, add, or delete memories, ensuring the assistant stays aligned with your needs and preferences. For example, if you start a new business or shift focus in your daily tasks, ChatGPT can be updated with that new information to offer more relevant guidance.

4. Practical Use Cases:

- Project Management: ChatGPT could track project milestones over time, offering reminders or suggestions based on what was discussed in past sessions. If you're working on a long-term strategy, it could recall specific objectives, deadlines, or resources you’ve mentioned.

- Learning and Development: For students or professionals learning new skills, ChatGPT can remember past queries about study topics, providing continued support or even suggesting further reading based on prior conversations.

- Health and Fitness: If you’re tracking your fitness progress or health goals with ChatGPT, it could recall previous conversations about your routine or any goals you set, offering tailored advice based on past discussions.

The memory feature is still evolving, and users remain in control of it, including the ability to turn it off completely for privacy reasons. As it continues to develop, it could become an indispensable tool for more personalized AI interactions across many aspects of daily life, from work and education to health and personal growth.

QUICK BYTES

Robust AI's Carter Pro Robot: Built for Collaboration and Human Assistance

The Carter Pro robot from Robust AI is designed for safe collaboration with humans in industrial settings. Notably, humans can move the robot manually using a handlebar built for single-handed control, which adds flexibility and safety when working alongside autonomous systems. Unlike many robots that rely on lidar, Carter uses cameras for navigation, an increasingly cost-effective and practical choice. Robust AI is targeting a diverse client base, with DHL as its first major partner, while avoiding dependence on any single customer to safeguard its future growth.

Microsoft Launches Carbon Removal Contest to Offset Growing AI Emissions

Microsoft, facing a 40% rise in emissions since 2020 due to its growing AI business, is turning to direct air capture (DAC) to meet its goal of becoming carbon-negative by 2030. In partnership with the Royal Bank of Canada, Microsoft is pre-purchasing 10,000 metric tons of carbon removal from Deep Sky, a DAC project hosting a competition among eight startups to determine the most efficient carbon removal method. The project, which will use shared solar power and a common storage site, is expected to begin operations by April, with carbon credits starting by June.

ChatGPT Can Now Access and Read Certain Desktop Apps on Your Mac

OpenAI's ChatGPT desktop app for macOS now integrates with coding and text apps such as VS Code, Xcode, TextEdit, Terminal, and iTerm2, letting developers work more seamlessly with the AI by automatically sending sections of code as context. The feature, called "Work with Apps," cannot write directly into these apps, so developers still have to paste ChatGPT's generated code into their environment manually. It relies on macOS's accessibility API to read text, and OpenAI plans to extend it to more text-based apps in the future. This marks a step toward more agent-like AI systems.

SPONSOR US

🦾 Get your product in front of AI enthusiasts

THAT’S A WRAP